The index to the articles in this series is found here.

I now have my larger data set: almost 5 years of radar data. Converting it into the intermediate binary format took about 40 hours using all the cores of my home machine, but after that I was able to start experimenting.

I mentioned in the last article that I was a bit concerned that the training, validation, and holdout sets overlapped in time. With only a handful of months of data, and rain falling only a few times a week, I had decided to split the data set that way anyway. With the new multi-year data set, though, I can make truly independent training, validation, and holdout sets from separate years.
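A minimal sketch of a year-based split, assuming each sample carries a NumPy `datetime64` timestamp. The function name `split_by_year` and the particular years are hypothetical, not taken from my code. The point is that the three masks select disjoint years, so no rain event can leak from the training set into the validation or holdout sets.

```python
import numpy as np


def split_by_year(timestamps, train_years, val_years, holdout_years):
    """Boolean masks selecting the samples whose year falls in each set.

    `timestamps` is a NumPy datetime64 array with one entry per sample.
    """
    groups = [set(train_years), set(val_years), set(holdout_years)]
    # A year listed in two sets would reintroduce the overlap we are avoiding.
    for i in range(3):
        for j in range(i + 1, 3):
            shared = groups[i] & groups[j]
            if shared:
                raise ValueError(f"years {sorted(shared)} appear in two sets")
    # Casting to datetime64[Y] and then to int gives years since 1970.
    years = timestamps.astype("datetime64[Y]").astype(int) + 1970
    return tuple(np.isin(years, sorted(g)) for g in groups)


# Hypothetical sample times and year assignment.
times = np.array(
    ["2014-07-01T12:00", "2015-03-10T06:30", "2016-08-22T18:10", "2017-05-05T00:00"],
    dtype="datetime64[m]",
)
train, val, holdout = split_by_year(times, [2014, 2015], [2016], [2017])
```

Splitting on whole years also means each set sees a full cycle of seasons, rather than one set being weighted toward summer storms and another toward winter rain.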

And… it turns out I was right to be concerned about that overlap. With disjoint data sets, the network can be seen overtraining almost immediately. That is, it is not getting any better at predicting the outcome from new data; it is getting worse. The prediction success rate on new data is now typically about 60%.
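One common way to see (and limit) overtraining is to watch the validation loss: it bottoms out and starts climbing while the training loss keeps falling. The sketch below implements the usual patience rule for early stopping in plain Python; the function name `best_epoch` and the loss values are made up for illustration. Keras offers the same rule as the `tf.keras.callbacks.EarlyStopping` callback (`monitor="val_loss"`, `patience`, `restore_best_weights`). Early stopping only caps the damage; it does not make the network generalize any better.

```python
def best_epoch(val_losses, patience=3):
    """Patience-based early stopping over a list of per-epoch validation losses.

    Returns (best, stop): the epoch with the lowest validation loss, and the
    epoch at which training would stop because the loss had not improved for
    `patience` consecutive epochs (or the last epoch if that never happens).
    """
    best_loss = float("inf")
    best = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best, epoch
    return best, len(val_losses) - 1


# Hypothetical losses: validation loss bottoms out at epoch 1, then rises.
val = [0.69, 0.67, 0.68, 0.70, 0.73, 0.77]
print(best_epoch(val, patience=3))  # (1, 4)
```

When the best epoch is 0 or 1, as it is in this hypothetical run, that is the signature of a network overtraining "almost immediately".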

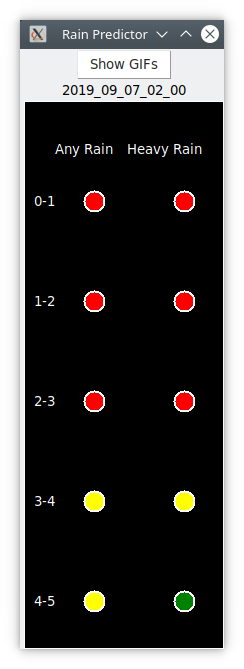

The desktop widget I have been using has still proven useful, even though its network was trained and validated on overlapping data. That network, though, was overtrained, and was probably doing worse on the predictions I was using it for than its validation results suggested.

So, how do we address the overtraining? To start with, I’ve changed the network geometry somewhat. Now, instead of feeding the data directly into the LSTM layer, I first feed it into a dense layer that takes the 800+ inputs and produces a smaller number of outputs to feed into the LSTM layer. I apply batch normalization to that layer, and 50% dropout, before going on to the LSTM. After the LSTM comes another 50% dropout, then another batch-normalized dense layer, and finally the output layer. I have also cut way back on the sizes of my layers: instead of hundreds of nodes per layer, I’m down to tens.
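The layer order described above can be sketched as a toy forward pass in NumPy. This illustrates shapes and ordering only and is not my actual model: the weights are random and untrained; the ReLU activations, the single sigmoid output, and every size (810 inputs, 32/24/16 units, 6 time steps) are assumptions; and batch normalization here uses the current batch's statistics with no learned scale or shift. In a framework such as Keras, the same stack would be built from `Dense`, `BatchNormalization`, `Dropout(0.5)`, and `LSTM` layers.

```python
import numpy as np

# Hypothetical sizes: "800+" inputs per time step, "tens" of nodes per layer.
N_STEPS, N_IN = 6, 810
N_DENSE1, N_LSTM, N_DENSE2, N_OUT = 32, 24, 16, 1

rng = np.random.default_rng(0)


def init(n_in, n_out):
    """Random weights (scaled by 1/sqrt(n_in)) and zero biases for a fully connected map."""
    return rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_out)), np.zeros(n_out)


def batch_norm(x, eps=1e-5):
    """Normalize each feature over all axes but the last, using this batch's statistics."""
    axes = tuple(range(x.ndim - 1))
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def dropout(x, rate):
    """Inverted dropout: zero a fraction `rate` of units and rescale the survivors."""
    if rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm(x, wx, wh, b):
    """Run an LSTM over axis 1 of x (batch, steps, features); return the final hidden state."""
    batch, steps, _ = x.shape
    units = wh.shape[0]
    h = np.zeros((batch, units))
    c = np.zeros((batch, units))
    for t in range(steps):
        z = x[:, t, :] @ wx + h @ wh + b      # pre-activations of all four gates
        i, f, g, o = np.split(z, 4, axis=1)   # input, forget, candidate, output
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h


w1, b1 = init(N_IN, N_DENSE1)
wx, bl = init(N_DENSE1, 4 * N_LSTM)
wh, _ = init(N_LSTM, 4 * N_LSTM)
w2, b2 = init(N_LSTM, N_DENSE2)
w3, b3 = init(N_DENSE2, N_OUT)


def forward(x, training=True):
    """x: (batch, N_STEPS, N_IN) -> (batch, N_OUT) values in (0, 1)."""
    rate = 0.5 if training else 0.0
    h = np.maximum(batch_norm(x @ w1 + b1), 0.0)  # dense 810 -> 32, batch-normalized, ReLU
    h = dropout(h, rate)                          # 50% dropout before the LSTM
    h = lstm(h, wx, wh, bl)                       # LSTM over the time steps, 24 units
    h = dropout(h, rate)                          # 50% dropout after the LSTM
    h = np.maximum(batch_norm(h @ w2 + b2), 0.0)  # second normalized dense layer, 16 units
    return sigmoid(h @ w3 + b3)                   # output layer


x = np.random.default_rng(1).normal(size=(8, N_STEPS, N_IN))
print(forward(x).shape)  # (8, 1)
```

Putting the narrow dense layer in front of the LSTM is what keeps the parameter count down: with these sizes the LSTM sees 32 inputs per step instead of 810, so its input weight matrix is 32 × 96 rather than 810 × 96.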

Even with all that, the network overtrains immediately. I have to find a better way to design the network to capture generic features, rather than specific details.

Early on, when the scope of the problem became apparent, I said I was not going to use convolutional layers, because the size of the data appeared to make them impractical. I’m now reconsidering that decision. I have some ideas for how I can apply convolutions without straining the memory of the machine. I was thinking of doing it in polar coordinates, the way the preprocessed data in the intermediate binary files is implicitly stored, but I think I’ll stick with Cartesian features for now. The intermediate binary files also contain a raw representation of the rain in each radar image, in addition to the preprocessed vectors, so I can process that before saving the NumPy arrays to disk for easy loading and reuse.
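One generic way to keep convolutions affordable, offered here as an assumption rather than the approach I have in mind, is to shrink the Cartesian rain raster before saving it: average non-overlapping blocks of pixels, store the stack as `float32` with `np.save`, and later open it with `np.load(..., mmap_mode="r")` so frames are read from disk only as needed. The function names `block_mean` and `save_stack` and the downsampling factor are hypothetical.

```python
import numpy as np


def block_mean(image, factor):
    """Downsample a 2-D raster by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a whole block are trimmed off.
    """
    rows = image.shape[0] // factor * factor
    cols = image.shape[1] // factor * factor
    trimmed = image[:rows, :cols]
    blocks = trimmed.reshape(rows // factor, factor, cols // factor, factor)
    return blocks.mean(axis=(1, 3))


def save_stack(path, images, factor):
    """Downsample each frame, save the stack as float32 .npy, and return its shape."""
    small = np.stack([block_mean(img, factor) for img in images]).astype(np.float32)
    np.save(path, small)
    return small.shape
```

For example, three 512 × 512 frames saved with `save_stack("rain_small.npy", frames, factor=4)` produce a `(3, 128, 128)` array on disk, and `np.load("rain_small.npy", mmap_mode="r")` gives a read-only view that a training loop can slice without loading the whole file. A factor of 4 cuts the pixel count, and therefore the area a convolutional layer has to sweep over, by a factor of 16, at the cost of fine spatial detail.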