The index to the articles in this series is found here.

Well, we’re about to start training the network, but first it’s worth pausing to talk about our data. We will need training and validation data sets, for one. Confusingly, the Keras documentation sometimes refers to the validation set as the test set, while common usage distinguishes the two: the validation set is used to monitor the network while it trains, and the test set is held back for a final evaluation.

I’m going to take a short aside now to talk about another data set. I mentioned early on that this project doesn’t really make use of feature selection. Other projects, however, will. If you are performing feature selection before setting up your network, there’s a slightly subtle source of error that can creep in. If your feature selection is performed on your validation data, you will introduce statistical biases. In effect, if feature selection picks out a feature that is really a statistical anomaly of that sample, not a true feature of the data, then validating on the same data set will tend to reinforce the anomaly. If you keep the data sets separate, the validation will not be weighted to support the anomaly, and you can expect the measurements to revert to the mean.
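To make the aside concrete, here is a minimal sketch of the effect, using only the standard library. Everything in it is hypothetical and not part of the rain predictor: the data is pure noise, `correlation` and `best_feature` are helper names I made up for the illustration, and "feature selection" is reduced to picking the single column most correlated with the labels.

```python
import random

def correlation(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5

def best_feature(rows, labels):
    """Index of the column most correlated (in absolute value) with the labels."""
    num_features = len(rows[0])
    scores = [abs(correlation([r[j] for r in rows], labels))
              for j in range(num_features)]
    return max(range(num_features), key=scores.__getitem__)

rng = random.Random(0)
# Pure noise: no column has any real relationship to the labels.
rows = [[rng.gauss(0, 1) for _ in range(50)] for _ in range(200)]
labels = [rng.randint(0, 1) for _ in range(200)]

train_rows, valid_rows = rows[:160], rows[160:]
train_labels, valid_labels = labels[:160], labels[160:]

# Leaky: select the feature on the validation data, then score it there.
leaky = best_feature(valid_rows, valid_labels)
leaky_score = abs(correlation([r[leaky] for r in valid_rows], valid_labels))

# Clean: select on the training data, score on the untouched validation data.
clean = best_feature(train_rows, train_labels)
clean_score = abs(correlation([r[clean] for r in valid_rows], valid_labels))

print('selected on validation data:', leaky_score)
print('selected on training data:  ', clean_score)
```

The leaky score can never be lower than the clean one, because it is by construction the largest correlation any column achieves on the validation data. That is exactly the anomaly being reinforced: the feature looks predictive only because it was chosen for looking predictive on that sample.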

OK, back to the rain predictor. So, I wrote a short script to split my data, called datasplit.py:

    #! /usr/bin/python3

    # Split the candidates file into training and validation sets.

    import argparse
    import random

    parser = argparse.ArgumentParser(description='Split data into '
                                     'training and validation sets.')
    parser.add_argument('--candidates', type=str, dest='inputfile',
                        required=True,
                        help='Path of the candidates file produced '
                        'by get-training-set.py')
    parser.add_argument('--veto-set', type=str, dest='vetoset',
                        help='If supplied, its argument is the name '
                        'of a file containing candidates that '
                        'are to be skipped.')
    parser.add_argument('--training-file', type=str, dest='trainingFile',
                        required=True,
                        help='The pathname into which training data '
                        'will be written.')
    parser.add_argument('--validation-file', type=str, dest='validationFile',
                        required=True,
                        help='The pathname into which validation data '
                        'will be written.')
    parser.add_argument('--validation-fraction', type=float,
                        dest='validationFrac', default=0.2,
                        help='The fraction of candidates that will be '
                        'reserved for validation.')
    parser.add_argument('--validation-count', type=int,
                        dest='validationCount', default=0,
                        help='The number of candidates that will be '
                        'reserved for validation.  '
                        'Supersedes --validation-fraction.')

    args = parser.parse_args()

    # Candidate numbers (first field of each record) that must be skipped.
    vetoes = set()
    if args.vetoset:
        with open(args.vetoset, 'r') as ifile:
            for record in ifile:
                fields = record.split()
                vetoes.add(int(fields[0]))

    # Keep every candidate record that has not been vetoed.
    validcandidates = []
    with open(args.inputfile, 'r') as ifile:
        for record in ifile:
            fields = record.split()
            if int(fields[0]) in vetoes:
                continue
            validcandidates.append(record)

    random.shuffle(validcandidates)

    # An explicit count, if given, supersedes the fraction.
    numValid = len(validcandidates)
    if args.validationCount == 0:
        numReserved = int(args.validationFrac * numValid)
    else:
        numReserved = args.validationCount

    # The first numReserved shuffled records go to validation, the rest
    # to training.  Each set is sorted again before it is written out.
    with open(args.trainingFile, 'w') as ofile:
        trainingset = validcandidates[numReserved:]
        trainingset.sort()
        for line in trainingset:
            ofile.write(line)

    with open(args.validationFile, 'w') as ofile:
        validationset = validcandidates[:numReserved]
        validationset.sort()
        for line in validationset:
            ofile.write(line)

This gets us our training set and our validation set. We take a random subset of the candidates out for validation, and keep the rest for training. I’ve chosen an 80% / 20% split because I don’t have a very large amount of data yet. With a larger dataset, I’d keep a similar number of samples for validation, so the fraction used for validation would drop.
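As a quick illustration of that last point, the sketch below mirrors the script’s rule that a non-zero count supersedes the fraction. The function name `reserved_count` and the dataset sizes are made up for the example; they are not measurements from this project.

```python
def reserved_count(num_candidates, fraction=0.2, count=0):
    """Number of records held out for validation.

    A non-zero count supersedes the fraction, as in datasplit.py.
    """
    if count == 0:
        return int(fraction * num_candidates)
    return count

# Hypothetical sizes: a small dataset split 80% / 20% ...
small_total = 1000
small_reserved = reserved_count(small_total)

# ... and a dataset ten times larger that keeps the same validation count.
large_total = 10000
large_reserved = reserved_count(large_total, count=small_reserved)

print(small_reserved, small_reserved / small_total)
print(large_reserved, large_reserved / large_total)
```

With these numbers the validation set stays at 200 records while its share of the data drops from 20% to 2%, which is what passing `--validation-count` instead of `--validation-fraction` would do.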

Now, I mentioned in an earlier article that I wanted to reduce the number of no-rain candidates, clear skies now giving clear skies later. I considered those uninteresting. Why didn’t I do the same for the converse case? Rain now means there will probably be rain 10 minutes from now. Well, I am interested in answering the question “if it’s raining now, when will it stop raining?” For that, I need those data elements.

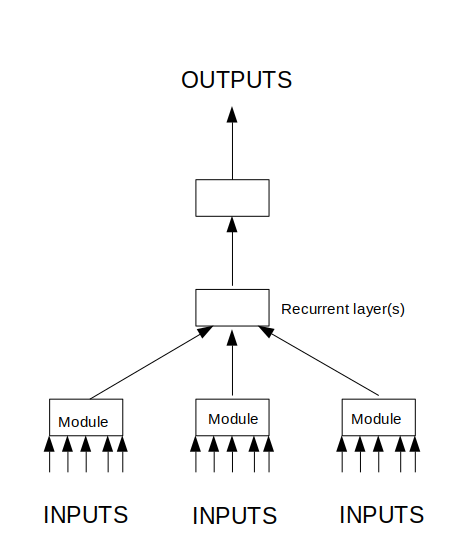

Next, our loss function. As the network trains, it needs an error metric that can be fed into the backpropagation step. I’ve got 10 binary fields that I’m treating as independent. They’re not entirely independent, since we never set the heavy rain bit when the rain-at-all bit is zero, but I’m going to ignore that subtlety for now. We’re not looking for a one-hot solution, where only one bit is set, so the loss function we want to use is binary_crossentropy. Next, though, we can talk about weights.
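For reference, binary cross-entropy treats each output bit as its own yes/no prediction and averages the per-bit penalties. The sketch below computes it by hand in plain Python so the arithmetic is visible. It is an illustration rather than the Keras implementation; the clipping value `eps` and the example bit pattern are assumptions of mine. In Keras itself the loss is selected by passing `loss='binary_crossentropy'` to `model.compile`.

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over a vector of independent binary outputs."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so that log(0) never occurs
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

# A hypothetical 10-bit target, not taken from the real training data.
target = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]

confident = [0.9 if bit else 0.1 for bit in target]  # mostly right on every bit
unsure = [0.5] * len(target)                         # no information at all

print('confident:', binary_crossentropy(target, confident))
print('unsure:   ', binary_crossentropy(target, unsure))
```

Several bits can be set at once without any of them competing with the others, which is why this loss suits a multi-bit output better than a one-hot loss such as categorical cross-entropy would.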

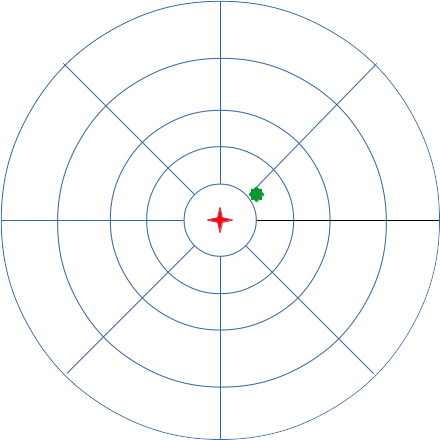

There are two measures that I’m really interested in, known as specificity and recall. Each is a fraction from 0 to 1. A high recall indicates a low rate of false negatives (rain that we failed to predict), and a high specificity indicates a low rate of false positives (predicted rain that never arrived). I’m interested in high recall: I’d rather be told it will rain, and then have it not rain, than go out thinking there wasn’t going to be rain, and get soaked. So, by manipulating the weights, or by writing a custom loss method, I’m going to be trying to adjust the loss to favour recall, even at a possible cost in lower specificity.
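Here is a small sketch of both ideas, using the standard confusion-matrix definitions: recall is TP / (TP + FN) and specificity is TN / (TN + FP). The weighted loss is one common way of penalizing missed rain more heavily than false alarms. It is an assumption for illustration, not necessarily the approach this series ends up taking, and the names `recall_and_specificity`, `weighted_bce`, and `pos_weight` are hypothetical.

```python
import math

def recall_and_specificity(y_true, y_pred):
    """Recall and specificity for two equal-length lists of 0/1 values."""
    tp = fn = tn = fp = 0
    for y, p in zip(y_true, y_pred):
        if y == 1 and p == 1:
            tp += 1
        elif y == 1 and p == 0:
            fn += 1  # it rained, but we predicted no rain
        elif y == 0 and p == 0:
            tn += 1
        else:
            fp += 1  # we predicted rain that never came
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return recall, specificity

def weighted_bce(y_true, y_pred, pos_weight=1.0, eps=1e-7):
    """Binary cross-entropy with the positive (rain) term scaled by pos_weight."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so that log(0) never occurs
        total -= pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

print(recall_and_specificity([1, 1, 0, 0], [1, 0, 0, 0]))

# With pos_weight > 1, missing rain costs more than a false alarm.
missed_rain = weighted_bce([1], [0.1], pos_weight=3.0)
false_alarm = weighted_bce([0], [0.9], pos_weight=3.0)
print(missed_rain, false_alarm)
```

With `pos_weight=1.0` the two mistakes cost the same. Raising it pushes the network toward predicting rain when in doubt, trading false positives for fewer false negatives.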